Introduction

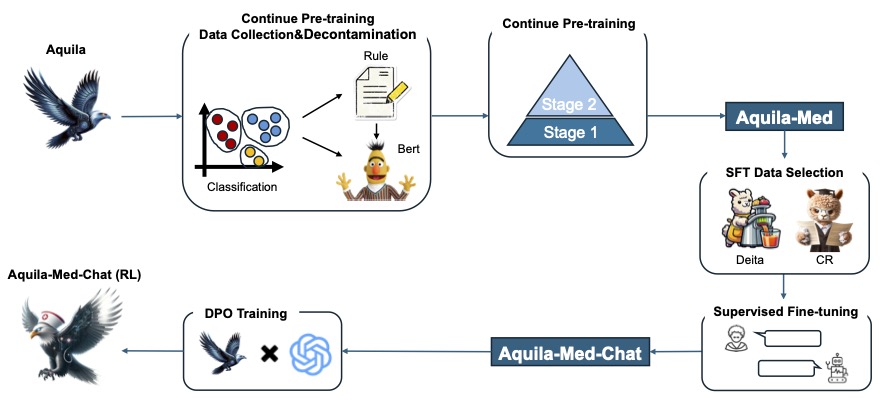

Aquila is a large language model independently developed by BAAI. Starting from the Aquila model, we applied a multi-stage training process of continued pre-training, SFT (Supervised Fine-Tuning), and RL (Reinforcement Learning), which resulted in the AquilaMed-RL model. The model has professional capabilities in the medical field and achieves a significant win rate against annotated reference answers when judged by GPT-4. AquilaMed-RL can perform medical triage, answer medication inquiries, and handle general Q&A. We will open-source the SFT and RL data required to train the model. We will also release a technical report detailing our methods for developing the model for the medical field, with the aim of supporting the open-source community. In addition, we use Qwen's tokenizer and chat template to train this industry model.

Model Details

The training process of the model is described as follows.

Dataset

We have released our SFT and RL datasets on Hugging Face:

- SFT: https://huggingface.co/datasets/BAAI/AquilaMed-Instruct

- RL: https://huggingface.co/datasets/BAAI/AquilaMed-RL
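As a minimal sketch, the two datasets could be pulled with the Hugging Face `datasets` library. The repository IDs come from the URLs above; the helper name `load_aquilamed` and the stage keys are illustrative, and the split and column names are not documented here, so inspect the returned object before relying on them.

```python
# Repository IDs taken from the dataset URLs listed above.
DATASET_REPOS = {
    "sft": "BAAI/AquilaMed-Instruct",
    "rl": "BAAI/AquilaMed-RL",
}


def load_aquilamed(stage: str):
    """Download one of the released datasets (hypothetical helper).

    Requires `pip install datasets` and network access.
    """
    if stage not in DATASET_REPOS:
        raise KeyError(f"unknown stage {stage!r}; expected one of {sorted(DATASET_REPOS)}")
    # Imported lazily so the mapping above is usable without the dependency.
    from datasets import load_dataset

    return load_dataset(DATASET_REPOS[stage])
```

Calling `load_aquilamed("sft")` and printing the result should list the available splits and columns.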

Evaluation

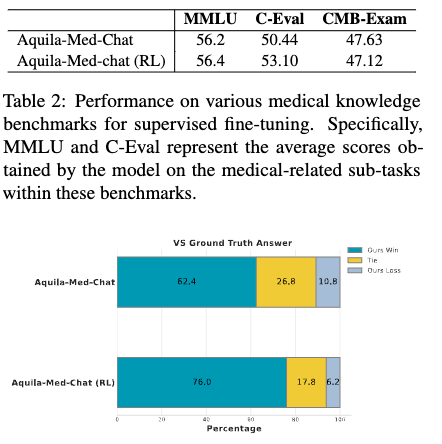

The subjective and objective scores are as follows.

Subjective: Using GPT-4 as the evaluator, we measure the win rate of our model against the reference answers in the annotated validation dataset.
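To make the win-rate metric concrete, here is a minimal sketch of the arithmetic. It assumes each GPT-4 comparison has already been reduced to one of three hypothetical labels (`"win"`, `"tie"`, `"loss"`) from the model's point of view. The actual judging prompt and the treatment of ties are not specified here, so counting ties in the denominator only is an assumption.

```python
from collections import Counter
from typing import Iterable


def win_rate(verdicts: Iterable[str]) -> float:
    """Fraction of comparisons the model won against the reference answer.

    `verdicts` holds one label per validation example: "win", "tie", or
    "loss" (hypothetical labels). Ties count in the denominator only.
    """
    counts = Counter(verdicts)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected verdict labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no verdicts given")
    return counts["win"] / total


# Made-up verdicts for illustration only, not real evaluation results.
example = ["win", "win", "loss", "tie"]
print(f"win rate: {win_rate(example):.2f}")
```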

Objective: We use MMLU, C-Eval, and CMB-Exam to evaluate the model.

Usage

Once you have downloaded the model locally, you can use the following code for inference.

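    import torch
    from transformers import AutoConfig, AutoModelForCausalLM, AutoTokenizer

    # Path to the locally downloaded model directory.
    model_dir = "xxx"

    tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
    config = AutoConfig.from_pretrained(model_dir, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir, config=config, trust_remote_code=True
    )
    model.cuda()
    model.eval()

    # Qwen-style chat template with a fixed system prompt.
    template = "<|im_start|>system\nYou are a helpful assistant in medical domain.<|im_end|>\n<|im_start|>user\n{question}<|im_end|>\n<|im_start|>assistant\n"

    text = "我肚子疼怎么办?"  # "What should I do about my stomach ache?"
    item_instruction = template.format(question=text)

    inputs = tokenizer(item_instruction, return_tensors="pt").to("cuda")
    input_ids = inputs["input_ids"]
    prompt_length = len(input_ids[0])

    # Greedy decoding; max_length counts prompt tokens plus generated tokens.
    generate_output = model.generate(
        input_ids=input_ids, do_sample=False, max_length=1024, return_dict_in_generate=True
    )

    # Drop the prompt tokens so that only the generated answer is decoded.
    response_ids = generate_output.sequences[0][prompt_length:]
    predicts = tokenizer.decode(
        response_ids, skip_special_tokens=True, clean_up_tokenization_spaces=True
    )
    print("predict:", predicts)

    """
    predict: 肚子疼可能是多种原因引起的,例如消化不良、胃炎、胃溃疡、胆囊炎、胰腺炎、肠道感染等。如果疼痛持续或加重,或者伴随有呕吐、腹泻、发热等症状,建议尽快就医。如果疼痛轻微,可以尝试以下方法缓解:
    1. 饮食调整:避免油腻、辛辣、刺激性食物,多喝水,多吃易消化的食物,如米粥、面条、饼干等。
    2. 休息:避免剧烈运动,保持充足的睡眠。
    3. 热敷:用热水袋或毛巾敷在肚子上,可以缓解疼痛。
    4. 药物:可以尝试一些非处方药,如布洛芬、阿司匹林等,但请务必在医生的指导下使用。
    如果疼痛持续或加重,或者伴随有其他症状,建议尽快就医。
    希望我的回答对您有所帮助。如果您还有其他问题,欢迎随时向我提问。

    English translation of the output above:
    predict: Stomach pain can have many causes, such as indigestion, gastritis, gastric ulcers, cholecystitis, pancreatitis, or intestinal infection. If the pain persists or worsens, or is accompanied by vomiting, diarrhea, fever, or similar symptoms, seek medical attention as soon as possible. If the pain is mild, you can try the following to relieve it:
    1. Dietary adjustment: avoid greasy, spicy, and irritating foods; drink plenty of water; eat easily digestible foods such as rice porridge, noodles, and crackers.
    2. Rest: avoid strenuous exercise and get enough sleep.
    3. Heat: apply a hot water bottle or a towel to the abdomen to relieve the pain.
    4. Medication: you can try over-the-counter drugs such as ibuprofen or aspirin, but be sure to use them under a doctor's guidance.
    If the pain persists or worsens, or is accompanied by other symptoms, seek medical attention as soon as possible.
    I hope my answer is helpful. If you have any other questions, feel free to ask.
    """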
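The prompt string in the snippet above follows the ChatML-style layout (`<|im_start|>role ... <|im_end|>`). As a sketch, the same string can be assembled by a small helper, which makes it easier to swap the system prompt. The function name `build_prompt` and its parameters are illustrative and not part of the released code.

```python
DEFAULT_SYSTEM = "You are a helpful assistant in medical domain."


def build_prompt(question: str, system: str = DEFAULT_SYSTEM) -> str:
    """Render a single-turn prompt in the same layout as `template` above."""
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{question}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


# Same result as template.format(question=...) in the inference snippet.
prompt = build_prompt("我肚子疼怎么办?")
print(prompt)
```

The trailing `<|im_start|>assistant\n` leaves the assistant turn open, so generation continues from that point.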

License

The Aquila series of open-source models is licensed under the BAAI Aquila Model Licence Agreement.

Citation

If you find our work helpful, please consider citing it.

    @misc{zhao2024aquliamed,
          title={Aqulia-Med LLM: Pioneering Full-Process Open-Source Medical Language Models},
          author={Lulu Zhao and Weihao Zeng and Xiaofeng Shi and Hua Zhou and Donglin Hao and Yonghua Lin},
          year={2024},
          eprint={2406.12182},
          archivePrefix={arXiv},
          primaryClass={cs.CL}
    }
